In a recent post, we explored how AI is transforming product development from design to deployment. But there’s another AI frontier reshaping the entire user-product relationship: agentic AI.

The AI evolution is accelerating beyond chatbots and personalized recommendations. Enter Agentic AI, a next-generation leap that moves artificial intelligence from reactive to proactive. For digital product teams, this shift is exciting and transformational.

What Is Agentic AI?

Traditional AI models respond to inputs: a user asks a question, and the AI answers. That’s reactive AI.

Agentic AI goes a step further, it exhibits autonomy, makes decisions based on goals, and takes initiative without being explicitly told what to do. In simple terms, it's an AI agent that can:

-

Understand objectives

-

Plan multi-step tasks

-

Act in dynamic environments

-

Adapt its behavior based on feedback

It’s the difference between asking for help and handing off a mission.

Real-World Example

Instead of asking a travel app to find you flights, imagine telling it:

"Plan a 5-day trip to Tokyo under $2,000, including flights, a hotel near Shibuya, and sushi spots locals love."

An agentic system wouldn’t only give you options, it would execute a series of tasks autonomously, delivering a complete itinerary, adapting to changes in real-time.

Why Product Teams Should Care

1. New Interaction Models: From Commands to Conversations

Agentic AI shifts interaction from command-based interfaces to goal-based collaboration. Users will call the shots rather than just press buttons and it will take care of the rest.

Implication: UX/UI must prioritize explainability, trust, and dynamic feedback loops. The interface is now a co-pilot.

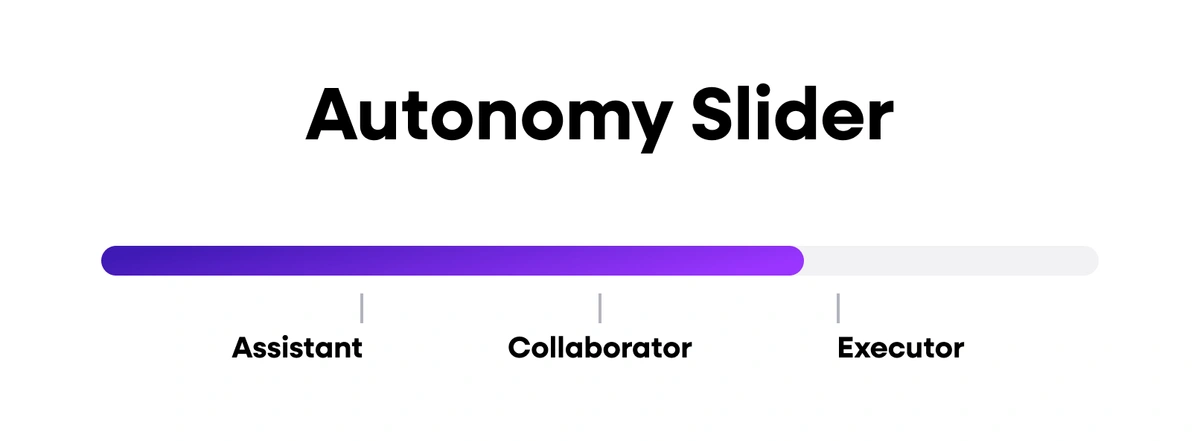

Karpathy's perspective (from "Software Is Changing (Again)"): The "autonomy slider" becomes a key UI principle where users should control how much autonomy they give to the system, from autocomplete to full agentic execution.

2. Product as an Intelligent Partner

Agentic products act more like collaborators than tools. They interpret context, make decisions, and self-correct.

Implication: Product managers must reimagine what it means for software to be “useful.” Features should now deliver outcomes, not only functionality.

Karpathy insight (from "Software Is Changing (Again)"): We’re entering Software 3.0, where prompts and goals (in natural language) replace hard-coded logic. Products must be designed to interface directly with LLMs while preserving user control.

3. Redefining MVP and Technical Roadmaps

Agentic AI changes the definition of an MVP. While defining features will still be important, the focus will be on intelligence and autonomy.

Implication: Roadmaps need to plan for graduated autonomy, from human-in-the-loop flows to near full agentic execution over time.

Karpathy’s take (from "Software Is Changing (Again)"): Think in terms of multi-modal LLM ecosystems, where orchestrating context, memory, and computation becomes part of your core tech stack, just like designing for an operating system in the 1960s.

4. New Development Paradigms

With LLMs, product development isn't solely coding anymore, it's also prompt engineering, context design, and dynamic orchestration.

Implication: Teams should be fluent in multiple software paradigms (1.0: code, 2.0: neural nets, 3.0: language prompts) and know when to use each.

Karpathy’s take (from "Software Is Changing (Again)"): The future of software is hybrid so your team must fluidly move between code, training data, and prompt-based instructions.

Challenges to Prepare For

Agentic AI isn’t plug-and-play. It introduces technical and human-centered challenges that demand intentional design:

1. Context Fragility & Memory Limitations

LLMs don’t persist knowledge across sessions like humans. They suffer from “anterograde amnesia,” forgetting past interactions unless context is explicitly engineered.

-

Action: Invest in robust memory management systems and session-aware design.

2. Verification & Oversight

LLMs can be fallible: hallucinating, misinterpreting, or overstepping bounds.

-

Action: Build UI/UX systems that allow users to easily audit, reject, or revise AI outputs. Visual diffs, change logs, and approval gates are crucial.

3. Security and Prompt Injection

LLMs are susceptible to prompt injection, data leaks, and malicious manipulation.

-

Action: Treat AI behavior like application logic: test it, sandbox it, and monitor for adversarial use.

4. Data Governance & Model Access

Agents require access to sensitive data to act intelligently but this introduces major compliance and trust issues.

-

Action: Collaborate with legal, data science, and infosec teams to establish strict access controls and explainability pipelines.

5. User Trust and Explainability

If users don’t understand why the AI did something, they’ll resist adopting it.

-

Action: Design for transparency and show the AI’s reasoning, references, and give users control over decisions. Autonomy without explainability is a UX liability.

What’s Next?

At Rapptr Labs, we believe agentic AI represents a paradigm shift, it won’t be a passing trend. We're already helping partners integrate these systems into digital experiences from healthcare to fintech.

Our recommendation:

Start small. Identify one flow where proactive intelligence could dramatically improve outcomes. Then build, test, and learn.

Final Thoughts

Agentic AI demystified is about rethinking how humans and digital products collaborate.

For forward-thinking product teams, the opportunity is massive: Design smarter, more intuitive systems that solve problems before users even ask.

The future of AI isn’t reactive.

It’s agentic.

And it’s here.